Chahat Deep Singh

Ph.D. Dissertation Defense

Minimal Perception: Enabling Robot Autonomy on Resource-Constrained Robots

Monday, June 26th, 2023

3:00 p.m. EDT (9:00 p.m. CEST)

IRB 3137

Zoom Link

Tuesday, June 27th, 2023 | 12:30 a.m. IST

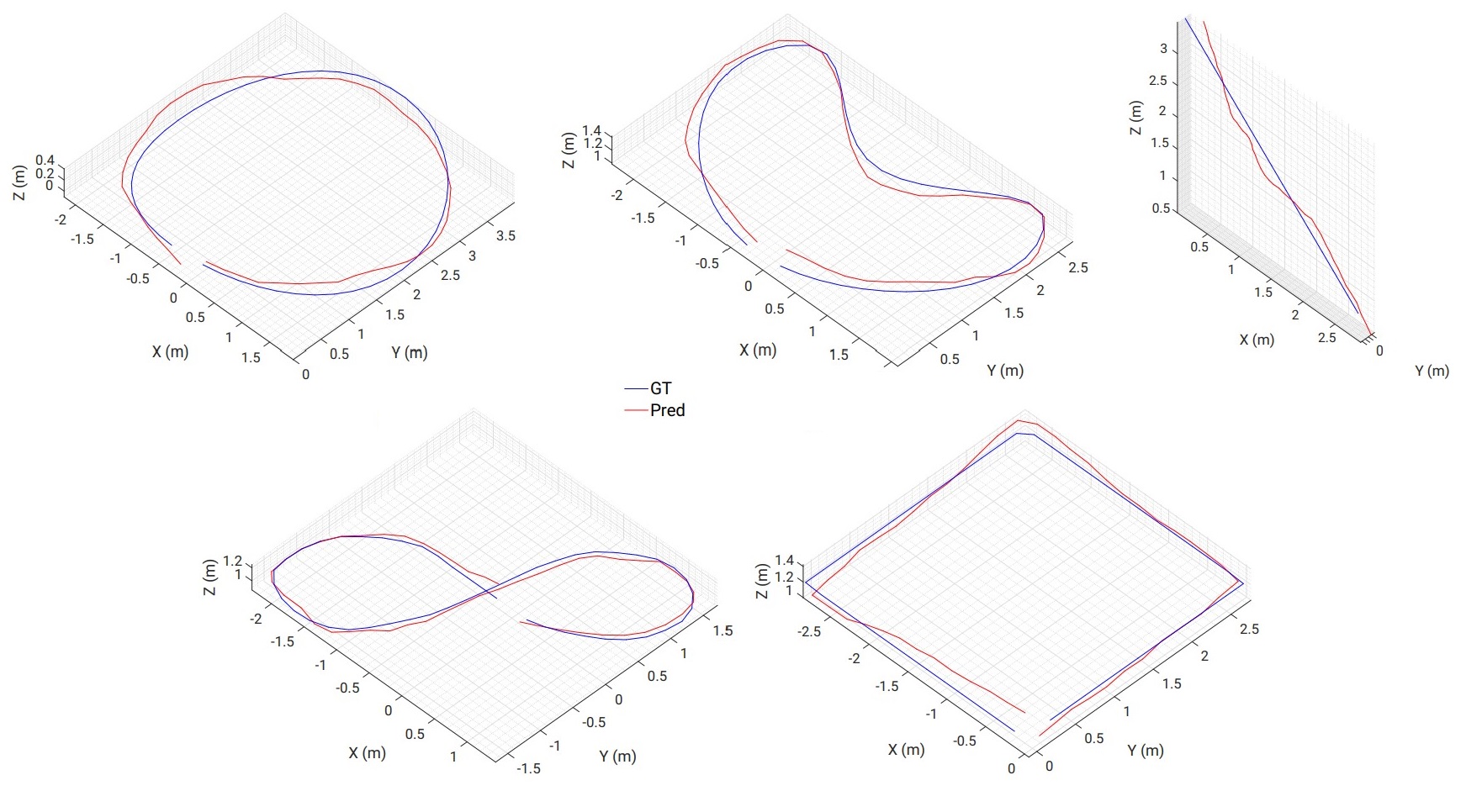

Abstract: Mobile robots are widely used across diverse fields because they can perform tasks autonomously. They improve efficiency and safety and enable new applications such as precision agriculture, environmental monitoring, disaster management, and inspection. Perception, a robot's ability to gather, process, and interpret data about its environment, is central to this autonomy: it supports navigation, object identification, and real-time reaction. With onboard perception, robots can operate without constant human intervention, even in remote or hazardous areas, which improves their adaptability and scalability. This thesis addresses the challenge of building autonomous systems for smaller robots used in precise tasks such as confined-space inspection and robotic pollination. Computing, power, and sensing constraints limit these robots' real-time perception. To address this, we draw inspiration from small organisms such as insects and hummingbirds, which show sophisticated perception, navigation, and survival abilities despite their minimalistic sensory and neural systems. This research aims to provide insights into designing compact, efficient, and minimal perception systems for tiny autonomous robots. Embracing minimalism is key to unlocking the full potential of tiny robots and their perception systems: by streamlining and simplifying their design and functionality, these compact robots can maximize efficiency and overcome the limitations imposed by their size. In this work, I propose a Minimal Perception framework that enables onboard autonomy in resource-constrained robots at scales (as small as a credit card) that were not possible before.
Minimal perception refers to a simplified, efficient, and selective approach to gathering and processing sensory information, spanning both hardware and software. Adopting a task-centric perspective allows the minimal perception framework to be further refined for tiny robots. For instance, jumping spiders, just 1/2 inch long, exhibit minimal perception: the sparse vision provided by their multiple eyes lets them perceive their surroundings efficiently and capture prey with remarkable agility. The contributions of this work can be summarized as follows:

Bio: Chahat Deep Singh is a fifth-year Ph.D. candidate in the Perception and Robotics Group (PRG), advised by Professor Yiannis Aloimonos and Associate Research Scientist Cornelia Fermüller. He received his Master's degree in Robotics from the University of Maryland in 2018 and then joined the Department of Computer Science as a Ph.D. student. Singh's research focuses on developing bio-inspired minimalist cognitive architectures that enable onboard autonomy on robots as small as a credit card. He received the Ann G. Wylie Fellowship for an outstanding dissertation (2022-2023), the Future Faculty Fellowship (2022-2023), and UMD's Dean Fellowship (2020). His work has recently been featured by BBC, IEEE Spectrum, Voice of America, NVIDIA, Futurism, and other outlets. He has organized the PRG Seminar Series since 2018 and served as a Maryland Robotics Center Student Ambassador from 2021 to 2023. He also reviews for RA-L, T-ASE, CVPR, ICRA, IROS, ICCV, and RSS, among other top journals and conferences. In July 2023, Chahat will join the Maryland Robotics Center at UMD as a Postdoctoral Associate under the supervision of Prof. Yiannis Aloimonos and Prof. Pratap Tokekar.

Examining Committee:

Dr. Yiannis Aloimonos (Chair)

Dr. Inderjit Chopra (Department Representative)

Dr. Guido de Croon (TU Delft)

Dr. Christopher Metzler

Dr. Nitin J. Sanket

Dr. Cornelia Fermüller