MIT Seminar, Spring 2023

Minimal Perception: A Vision for the Future of Tiny Autonomous Robots

Friday, March 24, 2023

10:30 a.m. EDT

Room 33-419

Massachusetts Institute of Technology

Invited by Luca Carlone

Abstract:

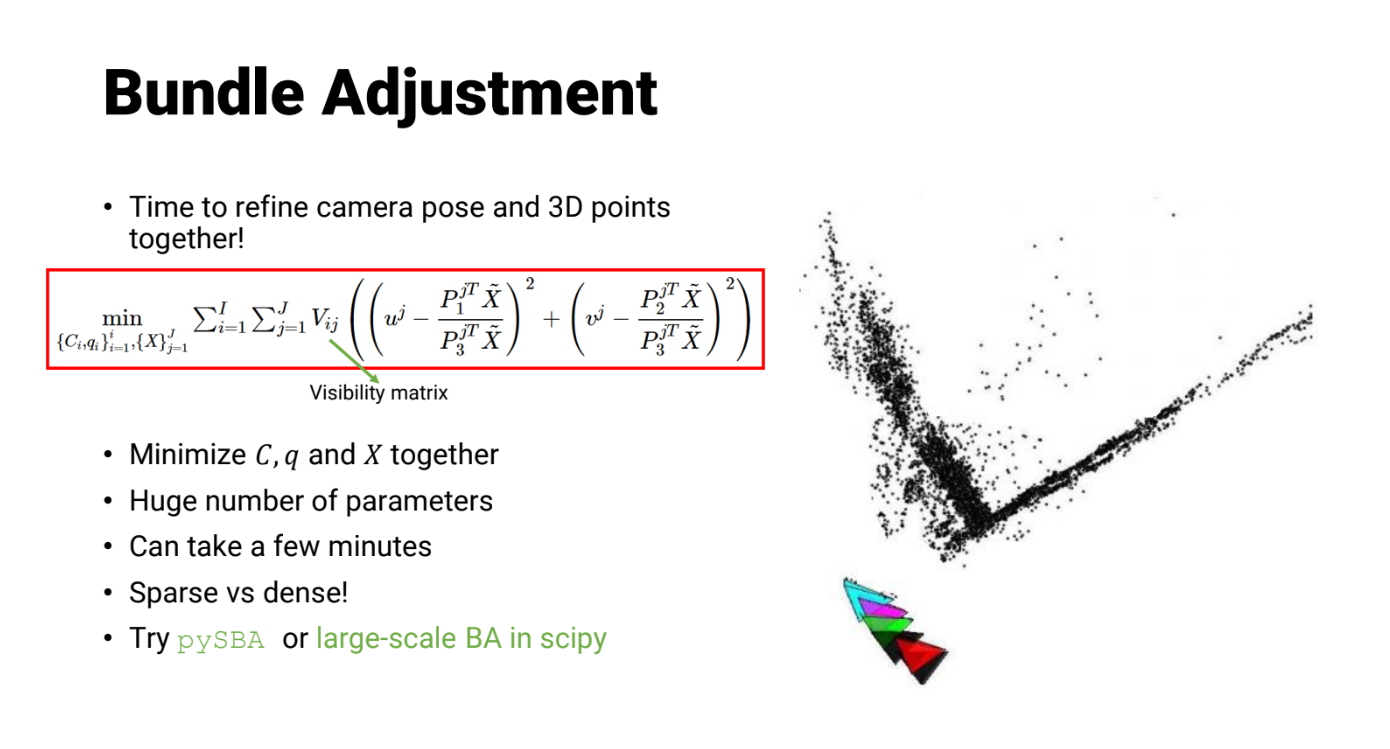

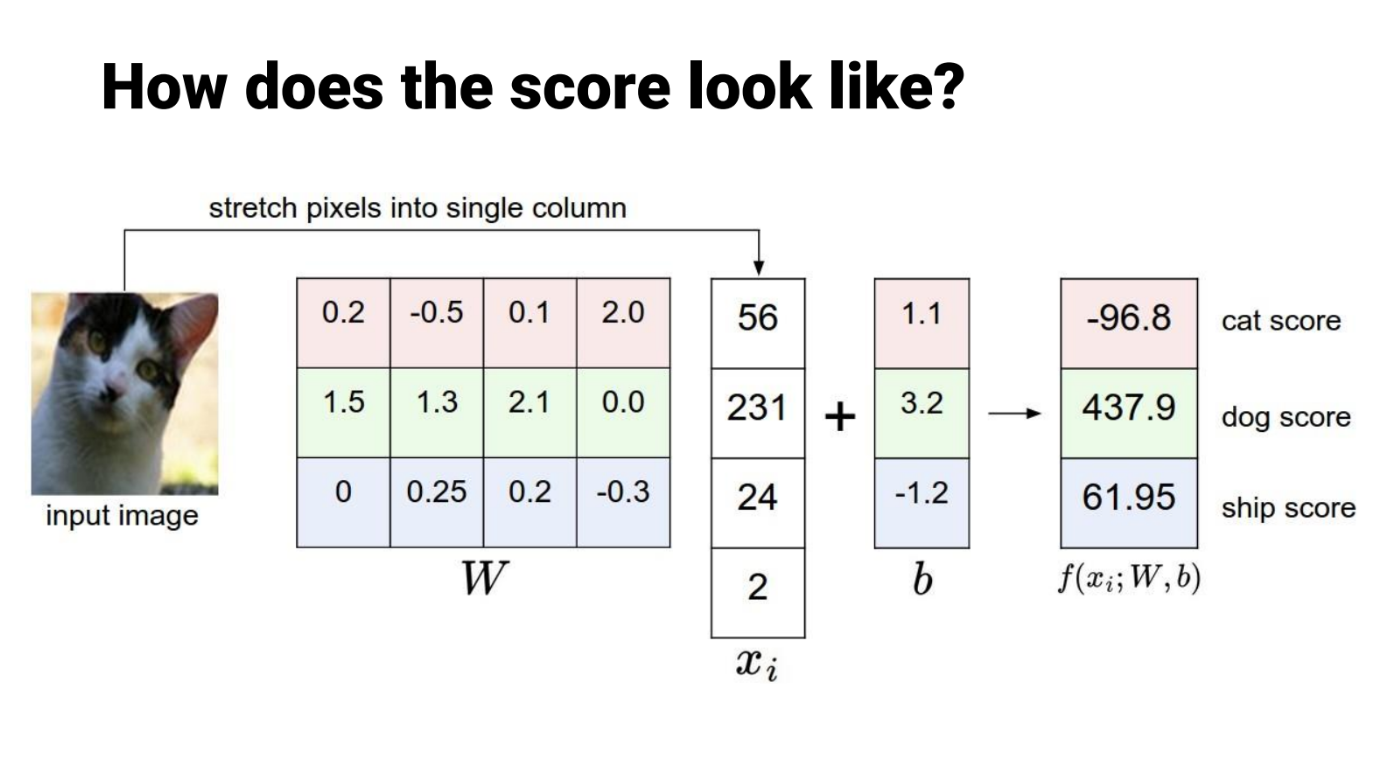

Although autonomous agents are built for a myriad of applications, their perceptual systems are designed with a single, general-purpose line of thought. Running these traditional methods on resource-constrained and/or small-scale robots (under 3 inches long) is highly inefficient, because the algorithms are generic rather than parsimonious. In stark contrast, the perceptual systems of biological beings have evolved to be highly efficient, shaped by their natural habitat and their day-to-day tasks. This talk draws inspiration from nature to build a minimalist perception framework for tiny robots that relies only on onboard sensing and computation, at scales previously thought impossible. The solution to minimal perception at such scales lies at the intersection of AI, computer vision, and computational imaging. The talk will discuss how modifying the sensor suite, and learning the structure and geometry of the scene rather than its texture, can yield smaller and faster depth prediction models. Reimagining the perception system from the ground up around a class of tasks is the key to minimalism: it produces a set of strong constraints that help tackle the problem of autonomy on tiny robots.